Section: New Results

Bayesian Perception

Participants: Christian Laugier, Jean-Alix David, Thomas Genevois, Blanche Baudouin, Jerome Lussereau, Lukas Rummelhard [IRT Nanoelec], Tiana Rakotovao [CEA since October 2017], Nicolas Turro [SED], Jean-François Cuniberto [SED].

Conditional Monte Carlo Dense Occupancy Tracker (CMCDOT)

Participants: Christian Laugier, Jean-Alix David, Thomas Genevois, Blanche Baudouin, Jerome Lussereau, Lukas Rummelhard [IRT Nanoelec], Amaury Nègre [Gipsa Lab], Nicolas Turro [SED].

The research work on Bayesian Perception continues and extends our previous research activity and results on this approach. (This activity started within the former Inria team-project e-Motion and has been conducted within the Inria Chroma team since 2015.) It builds on the initial BOF (Bayesian Occupancy Filter) paradigm (see section 3.2.1) and on its recent reformulation and framework, CMCDOT (Conditional Monte Carlo Dense Occupancy Tracker) [76]. More details about these developments can be found in the Chroma Activity Report 2016.

The objective of the research work performed in 2017 on this topic was to further refine the perception models and algorithms. Refinements have been made at three different levels:

-

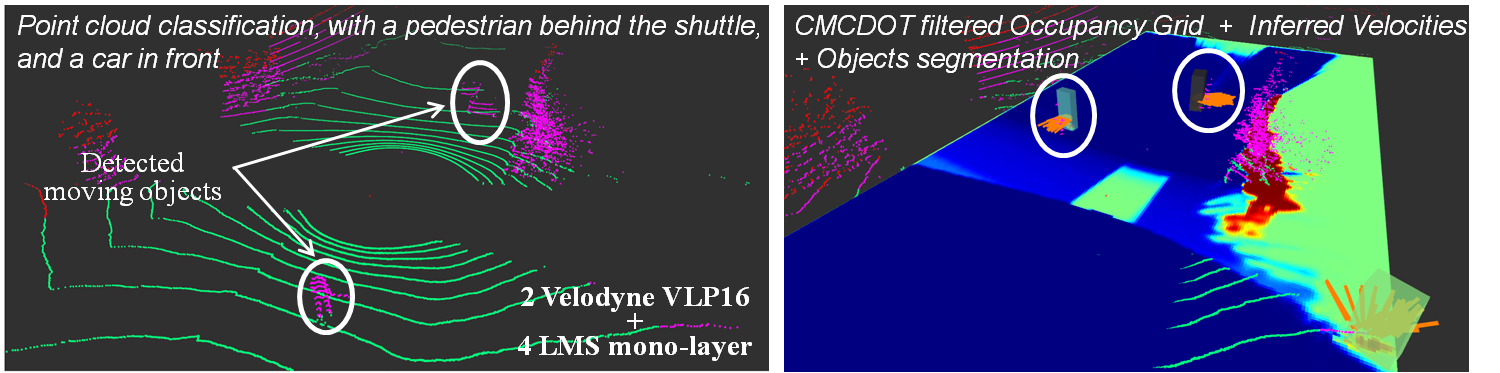

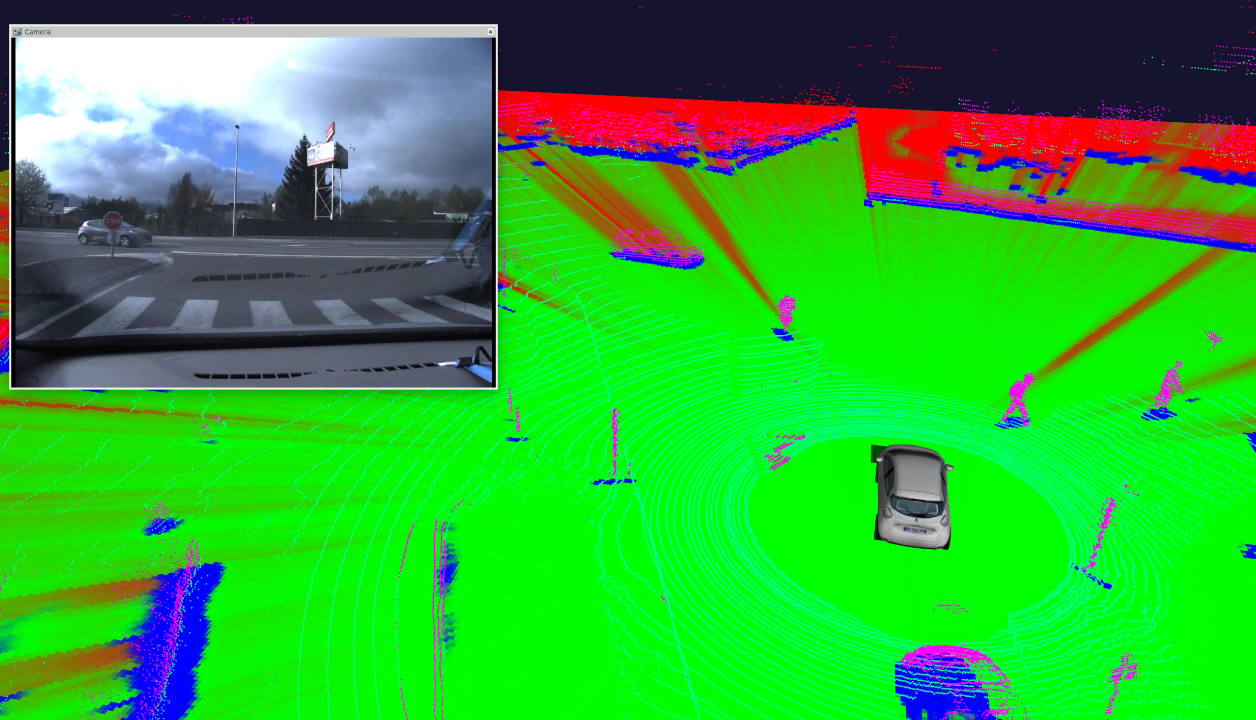

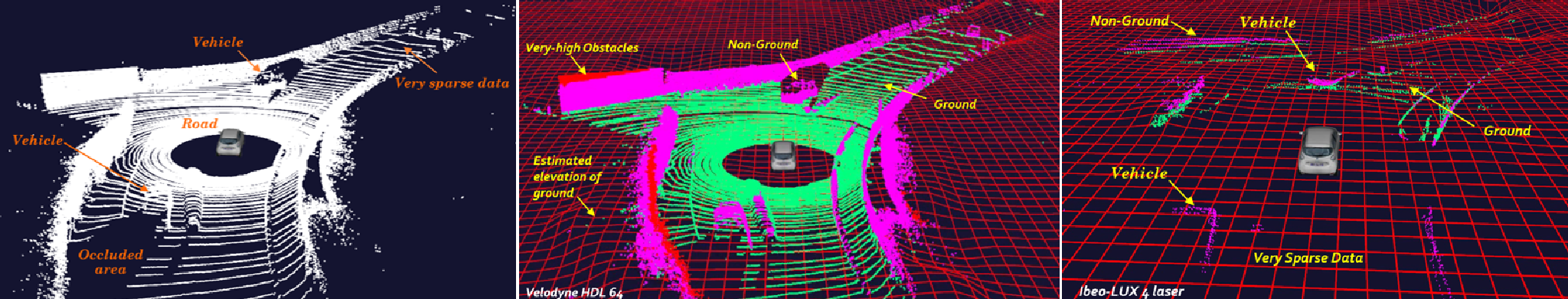

The occupancy grid generation process has been improved in order to appropriately process 2D and 3D lidars. In particular, the new sensor model for 3D lidar (called GEOG-Estimator), initially designed by the team in 2016, has been improved by adding a temporal filtering step. This step yields both a more accurate estimation of the elevation of the point cloud elements and a better occupancy grid generation with respect to the sensor calibration. This work was published and patented in 2017 [24] [23]. The results are illustrated in Figure 6 and Figure 7.

The sensor model for 2D lidar has also been modified to adapt it to the new paradigm introduced with GEOG-Estimator, with the objective of obtaining a better fusion of the occupancy grids.

Figure 6. Example of Occupancy Grid generated using the classified point cloud and the Ground Estimator model.

Figure 7. (a) Typical 3D point cloud generated by Velodyne LiDAR. (b) Point cloud segmentation between ground (green points) and non-ground (purple points), and estimated average elevation of the terrain (red grid). (c) Point Cloud Segmentation on 4-Ibeo Lux LiDAR data and estimated elevation of terrain.

-

A new approach for fusing several occupancy grids has been developed to appropriately merge the outputs of different sensors (2D/3D lidars, stereo cameras, etc.).

-

The CMCDOT framework, which takes the merged occupancy grid as input, has been improved by adding new filtering equations whose main objective is to provide better results. In addition, a new output format has been added to ease the connection with the decision and control components (see below).
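To make the grid fusion step more concrete, here is a minimal sketch of one classical way to merge occupancy grids: the independent-sensor Bayesian update in log-odds form. This is an illustration only; the report does not detail the team's fusion approach, and the function names (`logit`, `fuse_grids`), the 0.5 prior and the clamping constant are assumptions made for this example.

```python
import math


def logit(p):
    """Log-odds of a probability p in (0, 1)."""
    return math.log(p / (1.0 - p))


def fuse_grids(grids, prior=0.5, eps=1e-6):
    """Fuse same-sized occupancy grids (lists of rows of probabilities).

    Assumes the sensors are conditionally independent given the cell state,
    so the per-sensor log-odds (relative to the prior) simply add up.
    """
    rows, cols = len(grids[0]), len(grids[0][0])
    l_prior = logit(prior)
    fused = []
    for r in range(rows):
        fused_row = []
        for c in range(cols):
            log_odds = l_prior
            for grid in grids:
                # Clamp to avoid infinite log-odds for p = 0 or p = 1.
                p = min(max(grid[r][c], eps), 1.0 - eps)
                log_odds += logit(p) - l_prior
            fused_row.append(1.0 / (1.0 + math.exp(-log_odds)))
        fused.append(fused_row)
    return fused


# Hypothetical 2x2 grids from two sensors (0.5 means "unknown").
lidar_grid = [[0.5, 0.9], [0.2, 0.5]]
stereo_grid = [[0.5, 0.8], [0.5, 0.3]]
fused_grid = fuse_grids([lidar_grid, stereo_grid])
```

With a 0.5 prior, a cell that one sensor reports as unknown keeps the other sensor's estimate, while two agreeing observations reinforce each other.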

All the above-mentioned software modules are highly parallelizable. A GPU implementation has therefore been developed and is continually optimized to obtain efficient processing times and results. The whole perception framework is now able to run on low-energy embedded boards (Nvidia Jetson TX2). Thanks to this efficient embedded implementation, an industrial proof of concept on a commercial autonomous shuttle (from the EasyMile company) was successfully completed in a few weeks, as illustrated in Figure 8.


This approach also allowed us to develop a compact and portable demonstrator for conferences and exhibitions; Figure 8 (fourth picture) illustrates this technology.

Simulation based validation

Participants: Thomas Genevois, Nicolas Turro [SED], Christian Laugier, Tiana Rakotovao [CEA since October 2017], Blanche Baudouin.

In 2017, we started to address the concept of simulation-based validation within the EU Enable-S3 project, with the objective of searching for novel approaches, methods, tools and experimental methodologies for validating BOF-based algorithms. For that purpose, we have collaborated with the Inria Tamis team (Rennes) and with Renault to develop the simulation platform used in the test platform. The simulation of both the sensors and the driving environment is based on the Gazebo simulator. A simulation of the prototype car and its sensors has also been developed. In the simulator, the virtual lidars generate data in the same format as the real ones, so the same implementation of CMCDOT can handle both real and simulated data. The test management component that generates random simulated scenarios has also been developed. The outputs of CMCDOT computed from the simulated scenarios are recorded with ROS and analyzed by the Statistical Model Checker developed by the Inria Tamis team.
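As an illustration of how randomly generated scenarios can support a statistical guarantee, the sketch below uses plain Monte Carlo estimation with a Chernoff-Hoeffding bound on the number of runs, a standard technique in statistical model checking. It is not the Tamis tool itself: `required_runs`, `estimate_success_probability` and `toy_scenario` are hypothetical names, and the toy scenario (random speed and obstacle distance with a fixed braking model) merely stands in for a full Gazebo run.

```python
import math
import random


def required_runs(epsilon, delta):
    """Runs N such that P(|p_hat - p| >= epsilon) <= delta (Hoeffding bound)."""
    return math.ceil(math.log(2.0 / delta) / (2.0 * epsilon ** 2))


def estimate_success_probability(run_scenario, epsilon=0.05, delta=0.05, seed=0):
    """Estimate the probability that a random scenario satisfies the property."""
    rng = random.Random(seed)
    n = required_runs(epsilon, delta)
    successes = sum(1 for _ in range(n) if run_scenario(rng))
    return successes / n, n


def toy_scenario(rng):
    """Hypothetical stand-in for one simulated run: does the car stop in time?"""
    speed = rng.uniform(2.0, 8.0)        # vehicle speed in m/s
    obstacle = rng.uniform(5.0, 30.0)    # distance to the obstacle in m
    # Distance covered during a 0.2 s reaction time plus braking at 4 m/s^2.
    stopping = speed * 0.2 + speed ** 2 / (2.0 * 4.0)
    return stopping < obstacle


p_hat, n_runs = estimate_success_probability(toy_scenario)
print(f"estimated success probability: {p_hat:.3f} over {n_runs} runs")
```

The bound fixes the number of runs in advance from the desired precision `epsilon` and confidence `1 - delta`, independently of the scenario generator.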

Within the Perfect project, we also decided to use Gazebo to build our simulator. Gazebo is a simulation framework that provides many tools to simulate physics, sensors and actuators. Moreover, since Gazebo is fully compatible with ROS, it is easy for us to connect the simulator to our own perception and control tools (which all use ROS).

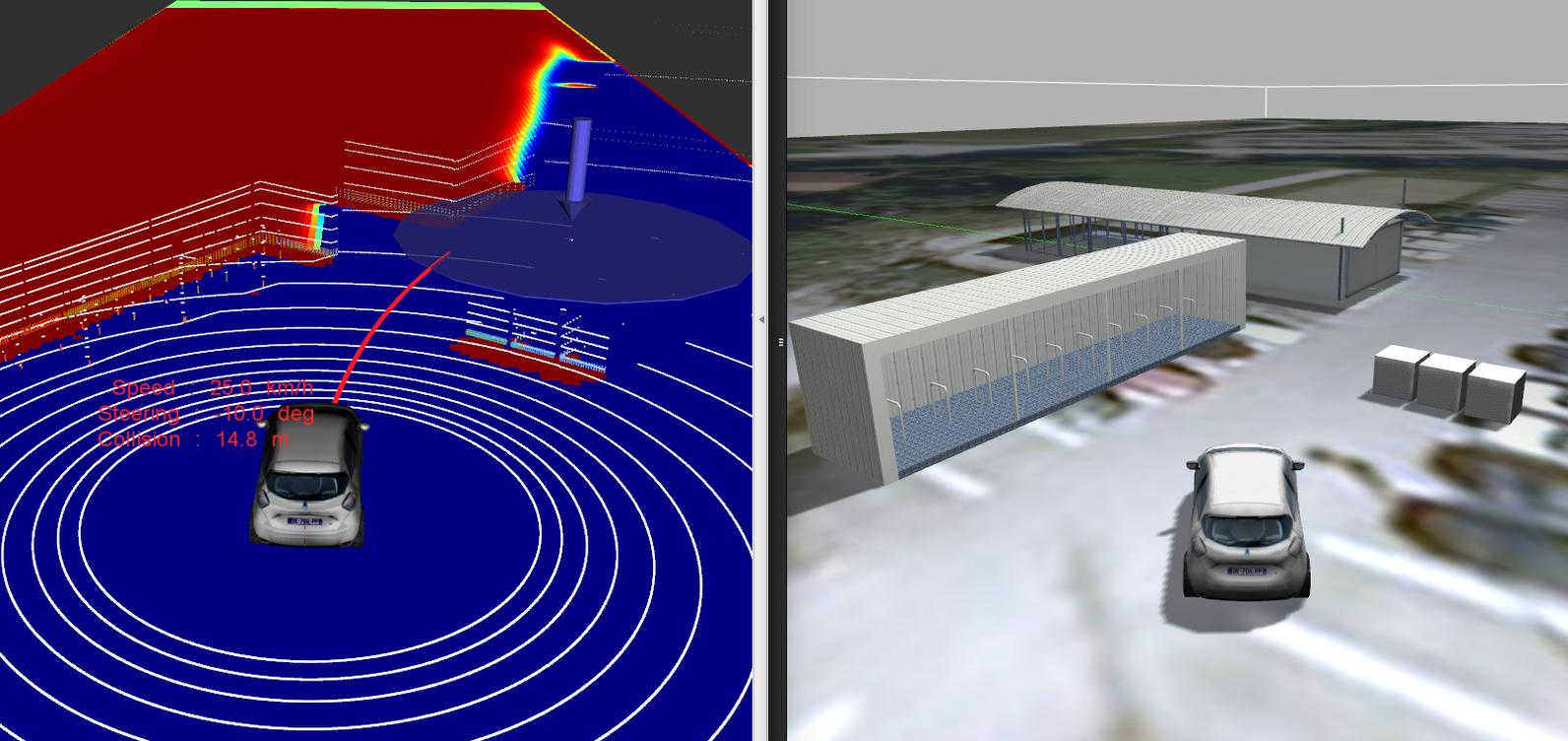

We have developed a Gazebo model of the Inria Renault Zoe demonstrator, including physical properties (friction, inertia, sliding), sensor models (lidars and odometry) and realistic actuators (steering, acceleration and brake), as illustrated in Figure 9 (first picture). All parameters have been tuned to match the actual vehicle and its equipment. In addition, several plug-ins allow us to drive the virtual vehicle with the same commands as the actual vehicle; similarly, the simulated sensors provide data in the same format as the actual sensors. As a result, the simulated vehicle behaves much like the actual car, and any program running on the actual car can be used directly on the simulated model without adaptation. The simulation runs in almost real time, which is very convenient for development purposes.

Thanks to this simulated model, we have safely developed control and navigation software (emergency braking, path following and local navigation with obstacle avoidance). During this work, the simulation helped us detect issues and fully debug the programs before running actual experiments. This showed that our model is well designed to behave like the real Zoe. Figure 9 illustrates the graphical output of the system.

Future work aims at improving these models to obtain faster execution, better low-level physical simulation (engine, brake, suspension), and new types of simulated sensors (cameras, IMU, radar, etc.). An industrial partner has already shown direct interest in our simulation model and asked for an adaptation to its own vehicle (no details can be given since this work is confidential).


Control and navigation

Participants: Thomas Genevois, Christian Laugier, Nicolas Turro [SED].

In January 2017, in partnership with Ecole Centrale de Nantes, we implemented a wire-based control kit on the team's Renault Zoe experimental vehicle.

We first developed an emergency braking system to avoid collisions during manual driving. This system triggers when the risk estimate provided by CMCDOT reaches a threshold. Significant engineering work was necessary to control the brake by software while letting the driver drive normally. The system has been successfully tested with both the simulator and the real vehicles on the PTL experimental platform of IRT-Nanoelec, as illustrated in Figure 10.

We plan to improve this system with different levels of risk (long term, medium term and short term) that would trigger different actions (warning, progressive braking, emergency braking). To do so, we will need to improve the reliability of long-term and medium-term risk estimation.
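The planned tiered behaviour can be sketched as a simple mapping from risk estimates to actions. This is only an illustration under stated assumptions: the `Action` enum, the `select_action` function, the three separate risk inputs and the 0.6 thresholds are hypothetical and do not come from the CMCDOT interface.

```python
from enum import Enum


class Action(Enum):
    NONE = "none"
    WARNING = "warning"
    PROGRESSIVE_BRAKING = "progressive_braking"
    EMERGENCY_BRAKING = "emergency_braking"


def select_action(risk_short, risk_medium, risk_long, thresholds=(0.6, 0.6, 0.6)):
    """Map collision risk estimates (in [0, 1]) to the most severe action needed.

    The thresholds are placeholder values; real ones would have to be tuned.
    """
    t_short, t_medium, t_long = thresholds
    # Check the most urgent horizon first so that it takes priority.
    if risk_short >= t_short:
        return Action.EMERGENCY_BRAKING
    if risk_medium >= t_medium:
        return Action.PROGRESSIVE_BRAKING
    if risk_long >= t_long:
        return Action.WARNING
    return Action.NONE


# Example: low short-term risk but high medium-term risk.
action = select_action(risk_short=0.1, risk_medium=0.8, risk_long=0.9)
```

Checking the shortest horizon first ensures that an imminent collision always overrides the milder responses.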

In a second step, we started working on a safe local navigation component. For that purpose, we have implemented a Dynamic Window Approach (DWA) local planner based on the occupancy grid. The DWA approach consists of computing online a set of feasible trajectories for the vehicle (feasible in terms of vehicle control and collision-free in the near future). A score is then assigned to each trajectory, taking into account its collision risk, its heading with respect to the goal and its speed. Finally, the trajectory with the best score is chosen and provided as a reference to the low-level controller. The DWA technique affords a simple and flexible architecture, and we took advantage of it to introduce the notion of time-to-collision into the score function. At the moment, the time-to-collision is computed from the occupancy grid provided by CMCDOT.

This approach has been successfully tested in simulation and with the real vehicle on the PTL experimental platform of IRT-Nanoelec. It behaved well at low speeds (<20 km/h) on a simple slalom and when going through automated barriers (the vehicle stops in front of a barrier and restarts when it opens), as illustrated in Figure 10.

Current and future work aims at improving the approach at two levels: (1) developing a parallel CUDA implementation on GPU to drastically reduce computation time, making it possible to compute several shapes of trajectories in real time (i.e. to obtain a better global motion) and to consider higher velocities and dynamic objects (i.e. anticipating the future motion of moving objects); (2) improving the low-level controller and the dynamic model of the vehicle (e.g. for computing the future positions of the vehicle from its odometry and the applied control commands), to obtain more precise control and better performance at higher speeds.
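The DWA steps described above (sample feasible commands, roll out trajectories, score them, keep the best) can be sketched as follows. This is a simplified illustration, not the team's planner: it assumes a unicycle motion model instead of a car-like one, a static occupancy grid given as a list of rows, and hypothetical parameter values and score weights. Time-to-collision is taken as the time at which a rollout first enters an occupied cell.

```python
import math


def rollout(x, y, theta, v, w, horizon, dt):
    """Forward-simulate a unicycle model; returns [(t, x, y, theta), ...]."""
    traj = []
    for k in range(1, int(round(horizon / dt)) + 1):
        x += v * math.cos(theta) * dt
        y += v * math.sin(theta) * dt
        theta += w * dt
        traj.append((k * dt, x, y, theta))
    return traj


def time_to_collision(traj, grid, resolution, occ_threshold=0.5):
    """Time at which the trajectory first enters an occupied cell (inf if never)."""
    for t, x, y, _ in traj:
        row, col = int(math.floor(y / resolution)), int(math.floor(x / resolution))
        inside = 0 <= row < len(grid) and 0 <= col < len(grid[0])
        # Cells outside the grid are treated as free in this sketch.
        if inside and grid[row][col] >= occ_threshold:
            return t
    return math.inf


def linspace(lo, hi, n):
    return [lo + i * (hi - lo) / (n - 1) for i in range(n)]


def dwa_select(state, goal, grid, resolution=0.5, v_max=5.0, w_max=1.0,
               a_max=2.0, alpha_max=2.0, control_period=0.5, horizon=2.0,
               dt=0.1, n_v=7, n_w=11, weights=(1.0, 0.2, 0.5)):
    """Return the (v, w) command with the best score in the dynamic window."""
    x, y, theta, v0, w0 = state
    k_heading, k_speed, k_ttc = weights
    # Dynamic window: velocities reachable within one control period.
    v_lo = max(0.0, v0 - a_max * control_period)
    v_hi = min(v_max, v0 + a_max * control_period)
    w_lo = max(-w_max, w0 - alpha_max * control_period)
    w_hi = min(w_max, w0 + alpha_max * control_period)
    best_cmd, best_score = (v_lo, 0.0), -math.inf  # fallback: slow down
    for v in linspace(v_lo, v_hi, n_v):
        for w in linspace(w_lo, w_hi, n_w):
            traj = rollout(x, y, theta, v, w, horizon, dt)
            ttc = time_to_collision(traj, grid, resolution)
            # Admissibility: the vehicle must be able to stop before impact.
            if ttc <= v / a_max:
                continue
            _, xf, yf, thf = traj[-1]
            err = math.atan2(goal[1] - yf, goal[0] - xf) - thf
            err = math.atan2(math.sin(err), math.cos(err))  # wrap to [-pi, pi]
            heading = 1.0 - abs(err) / math.pi
            speed = v / v_max
            clearance = min(ttc, horizon) / horizon
            score = k_heading * heading + k_speed * speed + k_ttc * clearance
            if score > best_score:
                best_cmd, best_score = (v, w), score
    return best_cmd


# Usage: 10 m x 10 m free grid, vehicle heading along +x towards the goal.
free_grid = [[0.0] * 20 for _ in range(20)]
cmd_free = dwa_select((1.0, 5.0, 0.0, 2.0, 0.0), (9.5, 5.0), free_grid)
```

Because every candidate command is rolled out and scored independently, this loop is the part that lends itself to a parallel implementation.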